Nowadays, businesses often consider hiring a data scientist to achieve smooth, hassle-free data processing. The term "Data Science" was first introduced to the business world in 2001. In 2009, Google's chief economist, Hal Varian, offered an insight: he argued that the ability to collect huge amounts of data and extract real value from it would shape modern businesses. Today, businesses rely heavily on machine learning algorithms, fed by ingested data, to solve complex business problems. These ML algorithms can help businesses in many ways. Here are a few of them:

- Predicting customers' future demand to optimize inventory deployment.

- Personalizing the customer experience so customers feel more connected.

- Improving fraud prediction.

- Finding out what motivates consumers and how strongly it influences them.

- Promoting brand awareness, reducing financial risk, and, above all, increasing revenue margins.

Data ingestion pipelines play a crucial role in achieving these outcomes. Before discussing why they matter, what benefits they bring, and how to build your own, let's first look at what data ingestion is.

What does data ingestion mean?

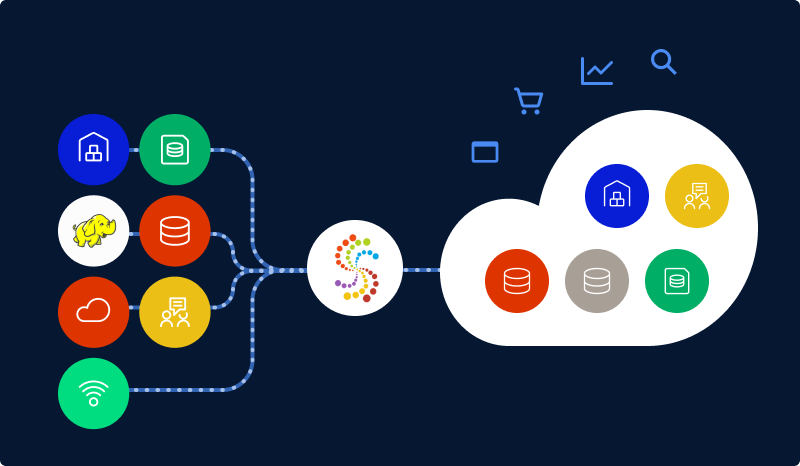

The process of onboarding data from one or more data sources into an application data store is referred to as data ingestion. Almost every business in every industry performs some form of data ingestion, whether it is a small task of moving data from one application to another or an enterprise-wide system that handles huge volumes of data streaming continuously from multiple sources. The destination is usually a data lake, database, or data warehouse. Sources can be almost anything, including relational databases (RDBMS), SaaS platform data, S3 buckets, in-house apps, other databases, CSV files, or even online streams. Data ingestion is a pillar of any analytics architecture, and it generally takes one of two forms: streamed or batched.

Streamed ingestion: Streamed ingestion comes into play when working with real-time data, such as transactions in event-driven applications. One example is loading and validating Visa card details as each transaction arrives, which requires executing the transaction and running a fraud detection algorithm in real time.
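To make the streamed case concrete, here is a minimal Python sketch that validates and screens card transactions one event at a time. The `CardTransaction` shape, the `is_suspicious` rule, and its thresholds are hypothetical placeholders, not a real Visa schema or a production fraud model, and a plain list stands in for a real event stream such as a message queue.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class CardTransaction:
    # Hypothetical event shape for illustration only; not a real card-network schema.
    card_id: str
    amount: float
    country: str


def is_suspicious(txn: CardTransaction, home_country: str = "US", limit: float = 5_000.0) -> bool:
    # Toy fraud rule (assumption): flag large amounts or charges from another country.
    return txn.amount > limit or txn.country != home_country


def ingest_stream(events: Iterable[CardTransaction]) -> Iterator[tuple[CardTransaction, bool]]:
    # Handle each event as soon as it arrives instead of waiting for a batch.
    for txn in events:
        if txn.amount <= 0:
            # Validation step: drop malformed events before they reach the data store.
            continue
        yield txn, is_suspicious(txn)


if __name__ == "__main__":
    # A list stands in for a live source such as a message queue consumer.
    incoming = [
        CardTransaction("card-1", 42.50, "US"),
        CardTransaction("card-2", 9_800.00, "US"),
        CardTransaction("card-3", -1.00, "US"),
        CardTransaction("card-1", 15.00, "FR"),
    ]
    for txn, flagged in ingest_stream(incoming):
        print(txn.card_id, txn.amount, "FLAGGED" if flagged else "ok")
```

Because `ingest_stream` is a generator, each transaction is validated and screened the moment it is pulled from the source, which is the key difference from batched ingestion.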

Batched ingestion: This is the most common type of data ingestion. Here, data arrives in batches or groups from sources such as another database, CSV files, or spreadsheets. When ingesting data in batches, conditions, logical ordering, and schedules need to be considered to achieve the best outcome.
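A minimal sketch of batched ingestion, assuming a CSV export is loaded into a SQLite table in fixed-size batches. The `orders` table, its columns, and the batch size are illustrative assumptions, and in-memory SQLite stands in for a real destination; in practice a scheduler (for example, a nightly cron job) would trigger a function like this.

```python
import csv
import io
import sqlite3
from itertools import islice
from typing import Iterator


def read_batches(rows: Iterator[dict], batch_size: int) -> Iterator[list[dict]]:
    # Group rows into fixed-size batches; the last batch may be smaller.
    while True:
        batch = list(islice(rows, batch_size))
        if not batch:
            return
        yield batch


def ingest_csv_in_batches(csv_text: str, conn: sqlite3.Connection, batch_size: int = 2) -> int:
    # Hypothetical destination table keyed on order_id.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id TEXT PRIMARY KEY, product TEXT, quantity INTEGER)"
    )
    reader = csv.DictReader(io.StringIO(csv_text))
    loaded = 0
    for batch in read_batches(reader, batch_size):
        rows = [(r["order_id"], r["product"], int(r["quantity"])) for r in batch]
        # Commit each batch in its own transaction.
        with conn:
            conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
        loaded += len(rows)
    return loaded


if __name__ == "__main__":
    sample = "order_id,product,quantity\nA1,widget,3\nA2,gadget,1\nA3,widget,5\n"
    conn = sqlite3.connect(":memory:")
    print(ingest_csv_in_batches(sample, conn))  # 3
    print(conn.execute("SELECT SUM(quantity) FROM orders").fetchone()[0])  # 9
```

Committing each batch separately means a failure in a later batch does not undo batches already loaded, and `INSERT OR REPLACE` keyed on `order_id` keeps re-runs of the same file from creating duplicate rows.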

A data ingestion pipeline is the series of steps involved in moving unprocessed data from a source to a destination. In business intelligence terms, a source could be an RDBMS, SaaS platform data, S3 buckets, in-house apps, databases, CSV files, or even online streams, while the destination is usually a data lake or a data warehouse. For example, a pipeline might move streaming and batched data from existing databases and data warehouses into a data lake. Companies with large volumes of data configure their ingestion pipelines to structure that data, so they can retrieve exactly what they need with SQL queries.
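As a rough illustration of structuring data so it can be queried with SQL, the sketch below flattens semi-structured JSON records into a fixed table and then runs an aggregate query. The event fields, the `events` table, and the use of in-memory SQLite as a stand-in for a warehouse are all assumptions made for the example.

```python
import json
import sqlite3

# Hypothetical raw events, e.g. exported from an in-house app as JSON lines.
RAW_EVENTS = """\
{"user": "alice", "event": "purchase", "amount": 30.0, "meta": {"device": "ios"}}
{"user": "bob", "event": "view"}
{"user": "alice", "event": "purchase", "amount": 12.5, "meta": {"device": "web"}}
"""


def structure(line: str) -> tuple:
    # Flatten one semi-structured record into a fixed set of columns;
    # missing fields become NULL instead of breaking the load.
    record = json.loads(line)
    return (
        record["user"],
        record["event"],
        record.get("amount"),
        record.get("meta", {}).get("device"),
    )


def load(raw: str, conn: sqlite3.Connection) -> None:
    conn.execute("CREATE TABLE events (user_id TEXT, event TEXT, amount REAL, device TEXT)")
    rows = [structure(line) for line in raw.splitlines() if line.strip()]
    with conn:
        conn.executemany("INSERT INTO events VALUES (?, ?, ?, ?)", rows)


conn = sqlite3.connect(":memory:")
load(RAW_EVENTS, conn)

# Once structured, the data can be queried with plain SQL.
query = "SELECT user_id, SUM(amount) FROM events WHERE event = 'purchase' GROUP BY user_id"
print(conn.execute(query).fetchall())  # [('alice', 42.5)]
```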

Benefits of a Data Pipeline:

Extracting data manually from many different sources and consolidating it into a spreadsheet or CSV files for analysis is a risky, error-prone job. Mistakes are common in such a process, and they can lead to redundant or inconsistent data. Handling real-time data this way would be next to impossible. Data ingestion pipelines consolidate data from different sources into one common destination database while enabling quick data analysis for business insights.
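The sketch below illustrates consolidation: records about the same customer arriving from two hypothetical sources (a CRM export and a billing database) are merged into one destination keyed by a normalized email address, so they are not stored twice. The field names and the "first non-empty value wins" rule are assumptions; real pipelines often need more careful conflict resolution.

```python
from typing import Iterable

# Hypothetical customer records from two sources.
crm = [
    {"email": "Alice@Example.com", "name": "Alice", "plan": None},
    {"email": "bob@example.com", "name": "Bob", "plan": None},
]
billing = [
    {"email": "alice@example.com", "name": "Alice", "plan": "pro"},
    {"email": "carol@example.com", "name": "Carol", "plan": "free"},
]


def consolidate(*sources: Iterable[dict]) -> dict[str, dict]:
    # One destination keyed by a normalized email, so the same customer
    # arriving from several sources is stored once instead of duplicated.
    merged: dict[str, dict] = {}
    for source in sources:
        for record in source:
            key = record["email"].strip().lower()
            existing = merged.setdefault(key, {"email": key})
            for field, value in record.items():
                # Keep the first non-empty value seen for each field.
                if field != "email" and value is not None and existing.get(field) is None:
                    existing[field] = value
    return merged


customers = consolidate(crm, billing)
print(len(customers))  # 3
print(customers["alice@example.com"])
```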

Building Blocks of a Data Pipeline:

A data ingestion pipeline typically has six main elements:

1. Source: This is where the data comes from. Sources can be relational databases, CSV files, CRM platforms, social media tools, and so on.

2. Destination: This is where the data goes, typically a data lake or a data warehouse.

3. Dataflow: Data flows from the source to the destination, and this movement is referred to as the dataflow. Two generic approaches to dataflow are ETL (extract, transform, load) and ELT (extract, load, transform).

4. Processing: This step decides how the dataflow is implemented. The two common methods of extracting data from sources and loading it into the destination are batch ingestion and stream ingestion.

5. Workflow: This refers to the sequence of jobs that need to be executed one after another. It typically follows a FIFO (first in, first out) order.

6. Monitoring: A data pipeline has to be monitored regularly to reduce data loss and ensure that data is processed accurately.
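To show how several of these building blocks fit together, here is a minimal Python sketch of an ETL dataflow run as a FIFO workflow with basic monitoring. The `extract`, `transform`, and `load` jobs are hypothetical stand-ins for real source reads and warehouse writes; `collections.deque` provides the FIFO queue, and the standard `logging` module plays the role of monitoring.

```python
import logging
from collections import deque
from typing import Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("pipeline")


def run_workflow(jobs: deque[tuple[str, Callable[[dict], None]]], context: dict) -> dict:
    # Workflow: jobs run strictly in FIFO order and share a context dict.
    stats = {"succeeded": 0, "failed": 0}
    while jobs:
        name, job = jobs.popleft()
        try:
            job(context)
            stats["succeeded"] += 1
            log.info("job %s ok", name)
        except Exception:
            # Monitoring: record the failure and stop, so later jobs don't run on bad data.
            stats["failed"] += 1
            log.exception("job %s failed; halting workflow", name)
            break
    return stats


# Hypothetical ETL dataflow: source -> transform -> destination.
def extract(ctx: dict) -> None:
    ctx["raw"] = ["  10", "20 ", "x", "30"]  # stand-in for reading a source


def transform(ctx: dict) -> None:
    clean, rejected = [], 0
    for value in ctx["raw"]:
        try:
            clean.append(int(value.strip()))
        except ValueError:
            rejected += 1  # monitoring signal: count rows that failed validation
    ctx["clean"], ctx["rejected"] = clean, rejected


def load(ctx: dict) -> None:
    ctx.setdefault("destination", []).extend(ctx["clean"])  # stand-in for a warehouse write


jobs = deque([("extract", extract), ("transform", transform), ("load", load)])
context: dict = {}
stats = run_workflow(jobs, context)
print(stats, context["destination"], "rejected:", context["rejected"])
```

In an ELT variant, the `load` job would run before `transform`, landing raw data in the destination and transforming it there. Halting on the first failure is one design choice; a production orchestrator might instead retry the job or raise an alert.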

Before concluding, here are two main points to consider when selecting a data ingestion tool:

- The format in which data is stored directly affects how it can be consumed later on.

- The ingestion technique (batched or streamed) should be the one that best fits your needs.

As the editor of the blog, she curates insightful content that sparks curiosity and fosters learning. With a passion for storytelling and a keen eye for detail, she strives to bring diverse perspectives and engaging narratives to readers, ensuring every piece informs, inspires, and enriches.